Hello my fellow Splunkers, Roman Lopez here reporting in with ringside insights into the big data wrangle. This time around I’d like to show you an obscure pro move that some thought was impossible. Let’s jump right in.

Rock Solid Alerts

Let’s set the stage by saying you need to create an alert to monitor some KPIs against a list of thresholds you received. No problem, right? You effortlessly whip up a lookup file that contains the thresholds for each KPI and use the lookup command to compare the KPIs in the data with their thresholds in the lookup. Now, to top it off, you create an alert to execute your query every 5 minutes and set it to look back 5 minutes. Is it production-ready? Nope. Like any Splunk superstar, you’ll want to check the indexing latency, or “lag”, to make sure it won’t deprive your search of the data it needs.

Indexing lag is, in essence, the time it takes for your indexers to receive new data and make it available for searching. In good environments, indexing lag will be under 30 seconds, but I’ve seen environments in trouble that take two minutes or more.

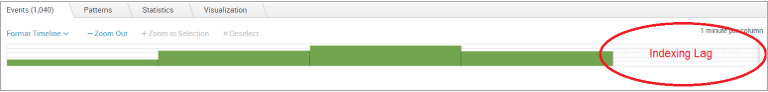

Here is what 1 minute of indexing lag looks like when you search for the past 5 minutes:

If you had set your query’s latest value to “now”, your search would constantly miss about a fifth of all KPI values (the most recent 1 minute out of every 5) and, as you can guess, this means you’ll get an email later on asking why your alert didn’t trigger at the appropriate time. No bueno…

The most common approach is to add an offset to your time window so instead of using:

earliest=-5m latest=now

You would do this with your earliest and latest:

earliest=-6m@m latest=-1m@m

This in effect gives your indexers 1 minute of slack to receive and prepare the data for your alert to execute. The admin in charge of the environment would be tasked with reviewing the indexing lag periodically to make sure that the offsets in place still hold via this search:

| tstats count as events WHERE index=* sourcetype=* BY _time,_indextime, sourcetype span=1s

| eval indexlag=_indextime-_time

| timechart avg(indexlag) BY sourcetype

But then, he would have to manually go into each alert and modify the search window when the indexing lag rises above the offset. Luckily this is where the pro-Splunker in you shines via a macro that will allow you to modify offsets for all searches by simply updating a lookup file:

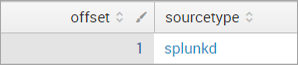

1. Create a lookup that contains a column for the sourcetype name that the alert is using (sourcetype) and another column to hold the offset value in minutes (offset). This is a sample lookup:

| inputlookup latency_offsets.csv

2. Define a macro called latency_offset(1), which takes a single argument named sourcetype, to hold this query:

[| inputlookup latency_offsets.csv WHERE sourcetype="$sourcetype$"

| addinfo

| eval earliest=info_min_time-(offset*60)

| eval latest="-".offset."m@m"

| eval search="earliest=".earliest." latest=".latest

| fields search]

3. Add the macro to the base search of each alert but make sure to write an entry for every sourcetype that’s in use.
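The steps above can be sketched end to end as follows. This is only an illustration: the sourcetype kpi_metrics, the index app_metrics, the fields kpi_name and kpi_value, and the lookup kpi_thresholds.csv are hypothetical names that do not come from the original setup. The first search seeds the offsets lookup with a 1-minute offset, and the second shows an alert's base search calling the macro.

```spl
| makeresults
| eval sourcetype="kpi_metrics", offset=1
| table sourcetype offset
| outputlookup latency_offsets.csv
```

```spl
index=app_metrics sourcetype=kpi_metrics `latency_offset(kpi_metrics)`
| stats avg(kpi_value) AS kpi_value BY kpi_name
| lookup kpi_thresholds.csv kpi_name OUTPUT threshold
| where kpi_value > threshold
```

With the alert scheduled over the last 5 minutes and an offset of 1, the macro should shift the window back by one minute, much like the hand-written earliest=-6m@m latest=-1m@m shown earlier, except that earliest is passed as an epoch value rather than a snapped relative time.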

The latency_offset(1) macro looks up the sourcetype you specified and subtracts the corresponding offset from the earliest and latest values used in the search. The new earliest and latest values are passed back from the subsearch to the main search via a rarely used mechanism: when a subsearch returns a field named search, Splunk inserts that field’s value into the main search as-is. You can now periodically review the latency and adjust the offsets by simply updating the lookup file you created.
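To see the special-field hand-off in isolation, here is a minimal sketch that does not need the lookup or the macro. It assumes you can search the _internal index; the hard-coded time window is arbitrary and only there for illustration. The subsearch builds a string in a field named search, and that string should be dropped into the outer search verbatim.

```spl
index=_internal
    [| makeresults
     | eval search="earliest=-10m@m latest=-5m@m"
     | fields - _time
     | fields search]
| stats count, min(_time) AS first_event, max(_time) AS last_event
| convert ctime(first_event) ctime(last_event)
```

If the hand-off works as described, first_event and last_event should both fall inside the 5-minute window ending 5 minutes ago, regardless of what the time picker is set to.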

If you want to really automate this you could write a scheduled search to update the offsets in the lookup file based on the trends of the indexing lag without any intervention on your part! Now that’s the real stamp of a pro data wrangler.
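One possible shape for such a scheduled search is sketched below. The choices here are assumptions rather than part of the original recipe: it looks back 24 hours, uses the 95th percentile of the lag instead of the average so that occasional spikes are covered, rounds up to whole minutes, and never goes below a 1-minute offset.

```spl
| tstats count AS events WHERE index=* sourcetype=* earliest=-24h@h latest=now BY _time, _indextime, sourcetype span=1s
| eval indexlag=_indextime-_time
| stats perc95(indexlag) AS p95_lag BY sourcetype
| eval offset=max(1, ceiling(p95_lag/60))
| fields sourcetype offset
| outputlookup latency_offsets.csv
```

Note that outputlookup overwrites the whole file, so a sourcetype that logged nothing during the lookback window would drop out of the lookup. Grouping by _indextime at 1-second resolution across every index can also be expensive, so you may want to narrow index=* to the indexes your alerts actually use.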
